Continuous Multimodal Human Action Dataset (C-MHAD)

Introduction

There exists no public domain continuous multimodal human action dataset in which inertial signals and video images are captured simultaneously and actions of interest are performed randomly among actions of non-interest. To fill this gap, a dataset named C-MHAD was collected in this work and made available to the research community. It covers two sets of actions of interest: smart TV gestures and transition movements.

Sensors

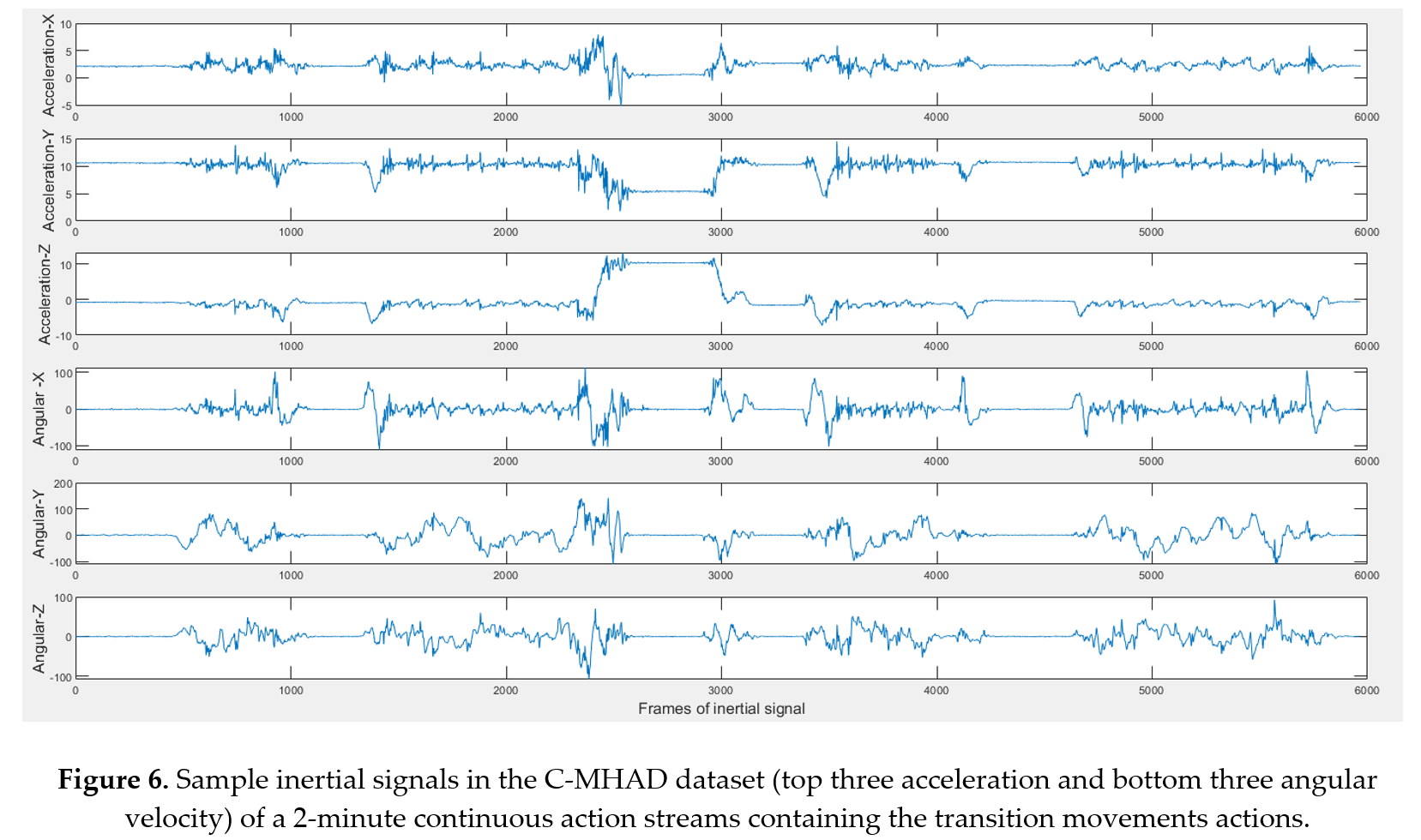

The inertial signals in this dataset consist of 3-axis acceleration signals and 3-axis angular velocity signals, which were captured by the commercially available Shimmer3 wearable inertial sensor at a frequency of 50 Hz and streamed to a laptop via a Bluetooth link. The videos were captured by the laptop videocam at a rate of 15 image frames per second and a resolution of 640×480 pixels. During data capture, the image frames and the inertial signals were synchronized via a time stamp mechanism.

Each action stream in this dataset lasts for 2 minutes and corresponds to 1801 image frames and 6001 inertial signal samples. Due to the delay associated with the Bluetooth communication, 30-40 samples of the inertial data at the beginning of each action stream are not present in the dataset. Thus, before using the inertial data, zero samples need to be padded at the beginning of each action stream, with the number of zeros matching the number of missing samples, so that the stream has a total of 6001 samples. The captured inertial signals were written to an Excel file, and the video frames were saved in a .avi file. For the smart TV gesture application, the inertial sensor was worn on a subject's right wrist, as shown in Fig. 1(a). For the transition movements application, the inertial sensor was worn on a subject's waist, as shown in Fig. 1(b).
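The zero-padding step described above can be sketched as follows. This is a minimal illustration, not the code shipped with the dataset packages: the function name `pad_inertial_start` and the constants are hypothetical, and a synthetic array stands in for the six-channel inertial data that would normally be read from the Excel file.

```python
import numpy as np

FS_INERTIAL = 50        # inertial sampling rate in Hz (from the description)
STREAM_SECONDS = 120    # each action stream lasts 2 minutes
EXPECTED_SAMPLES = FS_INERTIAL * STREAM_SECONDS + 1  # 6001 samples


def pad_inertial_start(signal, expected=EXPECTED_SAMPLES):
    """Prepend zero rows so that the stream has `expected` samples.

    `signal` has shape (n_samples, n_channels); the missing samples are
    assumed to be at the beginning, as stated in the dataset description.
    """
    signal = np.asarray(signal, dtype=float)
    missing = expected - signal.shape[0]
    if missing < 0:
        raise ValueError("stream is longer than the expected length")
    padding = np.zeros((missing,) + signal.shape[1:], dtype=signal.dtype)
    return np.concatenate([padding, signal], axis=0)


# Synthetic stand-in for a recorded stream with 35 missing samples
# (3 acceleration channels + 3 angular velocity channels).
recorded = np.ones((5966, 6))
padded = pad_inertial_start(recorded)
print(padded.shape)
```

After padding, sample index 0 lines up with the start of the video, so the inertial and video streams can be indexed on a common time axis.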

In total, 240 video clips (120 for the smart TV gesture application and 120 for the transition movements application) were collected from 12 subjects. For accurate recording of the data, the Avidemux video editing software was used with a time extension to view all the individual frames and record the times of the actions of interest in 10 ms increments. Note that the actions of interest are manually labelled in the C-MHAD dataset. In other words, for every action of interest in an action stream, the start and end times were identified by visual inspection of all the individual image frames.
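Because the labels are given as start and end times, a time has to be converted to a frame index and an inertial sample index before a segment can be cut out. The sketch below shows one way to do this. It assumes that index 0 of both modalities corresponds to time 0 and that the inertial stream has already been padded to 6001 samples; the function names and the example times are hypothetical.

```python
FPS_VIDEO = 15      # image frames per second (from the description)
FS_INERTIAL = 50    # inertial samples per second (from the description)


def time_to_indices(t_seconds):
    """Return the (frame_index, sample_index) nearest to a time in seconds."""
    frame = round(t_seconds * FPS_VIDEO)
    sample = round(t_seconds * FS_INERTIAL)
    return frame, sample


def segment_indices(start_s, end_s):
    """Return ((first_frame, last_frame), (first_sample, last_sample))."""
    first_frame, first_sample = time_to_indices(start_s)
    last_frame, last_sample = time_to_indices(end_s)
    return (first_frame, last_frame), (first_sample, last_sample)


# Hypothetical label: an action of interest from 12.34 s to 14.80 s.
frames, samples = segment_indices(12.34, 14.80)
print(frames, samples)
```

With this convention the last time instant of a stream, 120 s, maps to frame 1800 and sample 6000, which agrees with the stated stream lengths of 1801 frames and 6001 samples.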

Smart TV Gesture Application

The continuous dataset for this application contains 5 smart TV gestures as actions of interest, performed by 12 subjects (10 males and 2 females). Ten continuous streams of video and inertial data, each lasting for 2 minutes, were captured for each subject. The acceleration and angular velocity signals of the Shimmer inertial sensor were streamed in real time to a laptop via a Bluetooth link. At the same time, the videocam of the same laptop was used to capture video. The subjects moved freely in the field of view of the camera. All the gestures were performed with the arm wearing the inertial sensor. The 5 actions of interest in the smart TV gesture application are swipe left, swipe right, wave, draw circle clockwise, and draw circle counterclockwise. These gestures or actions are listed in Table 1.

Figure 2 shows representative image frames for each action or gesture of interest. Figure 3 shows the six inertial signals (3 accelerations and 3 angular velocities) for a sample 2-minute action stream. The subjects performed the actions or gestures of interest in random order during an action stream while arbitrarily performing their own actions of non-interest in-between the actions or gestures of interest.
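Since actions of interest are interleaved with arbitrary actions of non-interest, it is often convenient to turn the labelled intervals into a per-sample label vector for a whole stream. The following is a minimal sketch under stated assumptions: the label value 0 for non-interest, the integer action identifiers, and the example annotations are all hypothetical and do not reflect the file format of the dataset packages.

```python
import numpy as np

FS_INERTIAL = 50     # inertial samples per second (from the description)
N_SAMPLES = 6001     # samples per 2-minute stream after padding
NON_INTEREST = 0     # hypothetical label for actions of non-interest


def build_label_vector(annotations, n_samples=N_SAMPLES, fs=FS_INERTIAL):
    """Build one label per inertial sample from (start_s, end_s, action_id)."""
    labels = np.full(n_samples, NON_INTEREST, dtype=int)
    for start_s, end_s, action_id in annotations:
        # Clamp to the valid index range of the stream.
        start = max(0, round(start_s * fs))
        end = min(n_samples - 1, round(end_s * fs))
        labels[start:end + 1] = action_id
    return labels


# Hypothetical annotations: two actions of interest in one stream.
annotations = [(10.0, 12.0, 3), (30.5, 33.0, 1)]
labels = build_label_vector(annotations)
print(int((labels != NON_INTEREST).sum()), "samples belong to actions of interest")
```

The same function works for the video modality by passing `n_samples=1801` and `fs=15`.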

Transition Movements Application

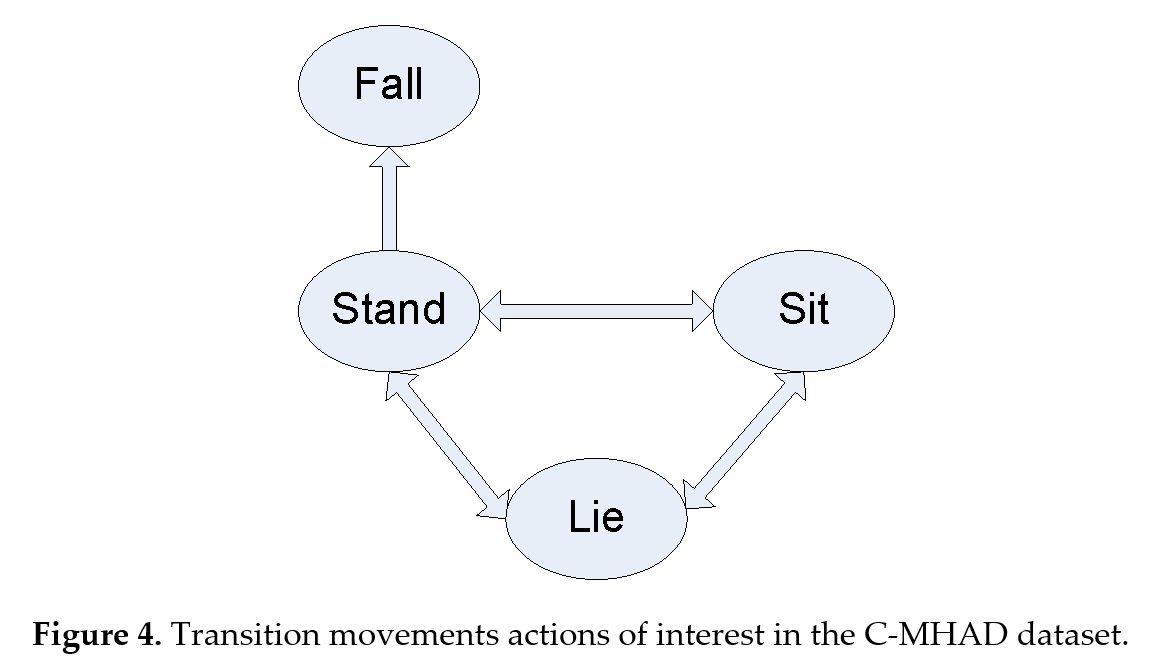

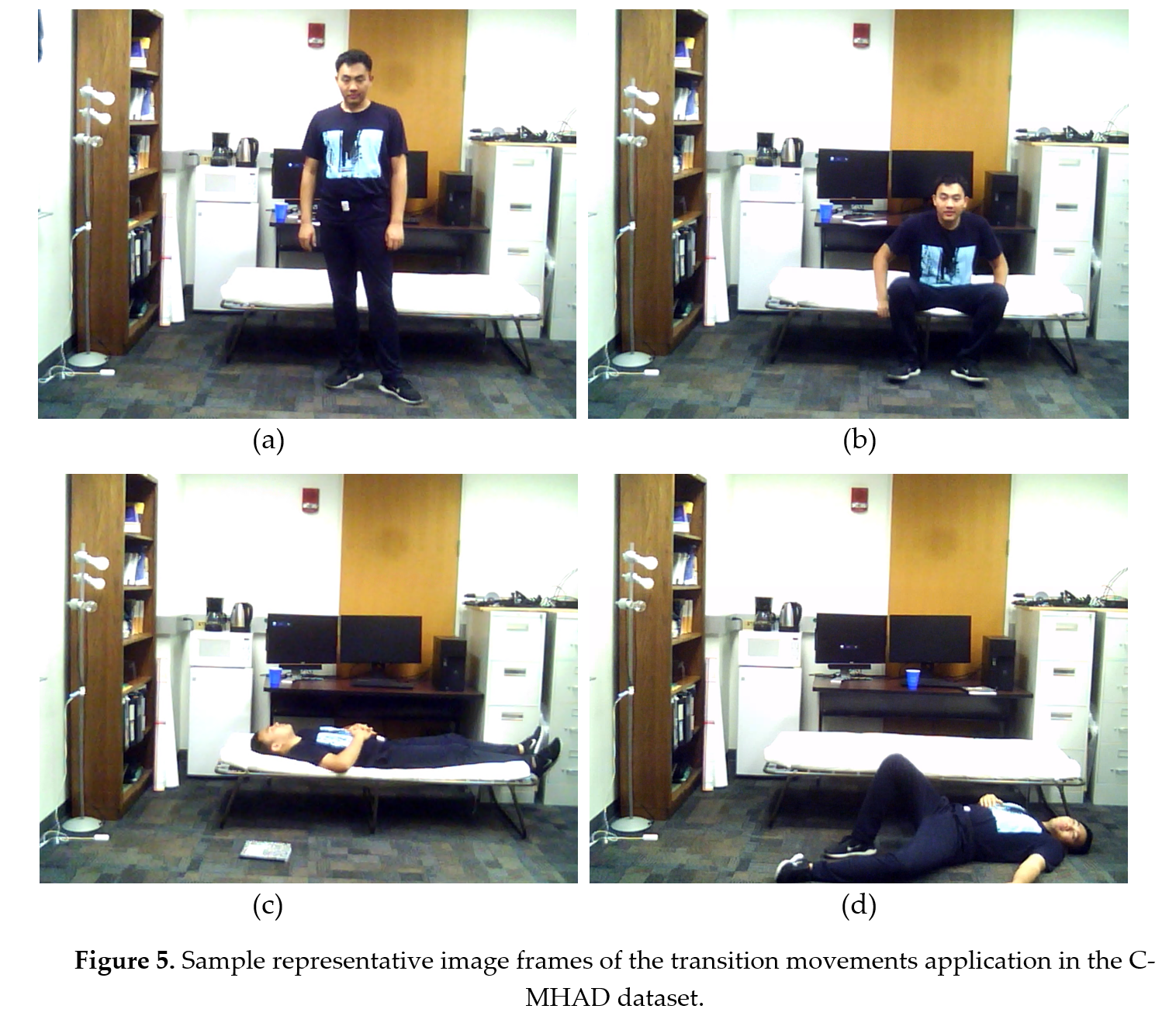

The continuous dataset for this application consists of 7 actions of interest reflecting transition movements performed by the same 12 subjects (10 males and 2 females) who performed the smart TV gestures. As depicted in Fig. 4, these actions of interest consist of stand to sit, sit to stand, sit to lie, lie to sit, lie to stand, stand to lie, and stand to fall. These transition movements take place between four positions of stand, sit, sleep, and fall, as illustrated in Fig. 5. Ten continuous streams of video and inertial data, each lasting for 2 minutes, were captured for each subject. This time the Shimmer inertial sensor was worn around the waist. The subjects performed the transition movements in random order during an action stream while arbitrarily performing their own actions of non-interest in between the actions of interest. A bed was used for the lying down transition movements, while the falling down was done from the standing position to the floor. Figure 6 shows the six inertial signals (3 accelerations and 3 angular velocities) for a sample 2-minute action stream of the transition movements application.

Download

The dataset files can be downloaded here. A Python package and a MATLAB package are also provided which allow one to view and use the data from the two modalities.

Citation

The following paper provides more details on the C-MHAD dataset. It is requested that this paper be cited when using the dataset.

[1] H. Wei, P. Chopada, and N. Kehtarnavaz, "C-MHAD: Continuous Multimodal Human Action Dataset of Simultaneous Video and Inertial Sensing," to appear in Sensors, 2020.

To detect and recognize actions of interest in continuous action streams, the following paper provides a solution:

[2] H. Wei and N. Kehtarnavaz, "Simultaneous Utilization of Inertial and Video Sensing for Action Detection and Recognition in Continuous Action Streams," IEEE Sensors Journal, vol. 20, pp. 6055-6063, June 2020.

Contact

For questions regarding the dataset, please contact Haoran Wei (Haoran.Wei@utdallas.edu) or N. Kehtarnavaz (kehtar@utdallas.edu).